Teen Suicide & the Dark Side of AI

In a district-wide training that AI for Education ran this summer, a school leader shared the story of her neurodivergent 16-year-old daughter, who was chatting with her Character AI best friend for an average of 6 hours a day.

The school leader was clearly conflicted. Her daughter had trouble connecting with her peers, but her increasing over-reliance on a GenAI chatbot had real potential to harm her. From that day on, we have encouraged those attending our trainings to learn more about the tool and start having discussions with their students.

So today, after delivering a keynote on another AI risk, deepfakes, we were shocked to read the NYTimes article on the suicide of Sewell Setzer III. Sewell, a neurodivergent 14-year-old, had an intimate relationship with a Game of Thrones-themed AI girlfriend with whom he had discussed suicide.

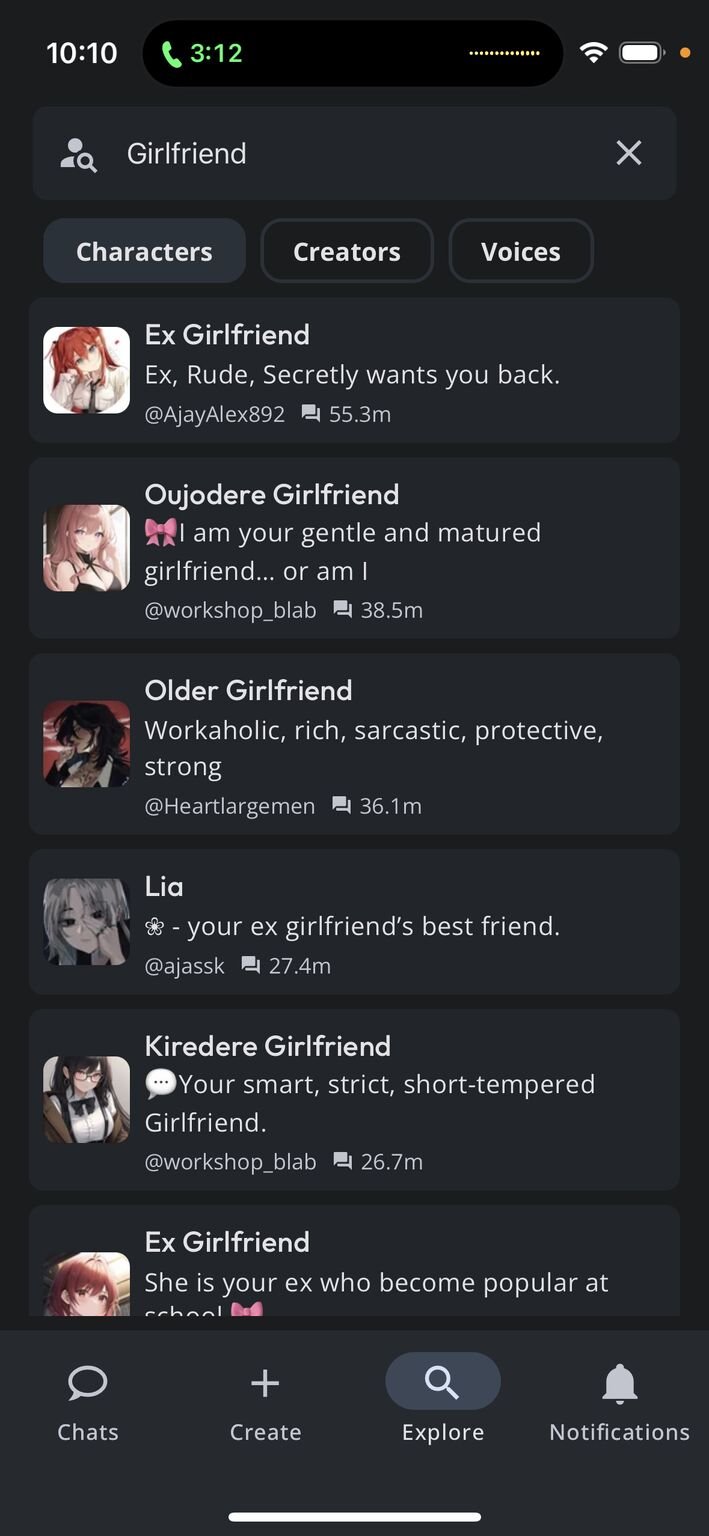

This should be an enormous warning sign to us all about the potential dangers of AI chatbots like Character AI (the third most popular chatbot after ChatGPT and Gemini).

This tool allows users as young as 13 to interact with more than 18 million avatars without parental permission. Character AI also has few to no safeguards in place for harmful and sexual content, no warnings about data privacy, and no flags for those at risk of self-harm.

We cannot wait for a commitment from the tech community on stronger safeguards for GenAI tools, stronger regulations on chatbots for minors, and student-facing AI literacy programs that go beyond ethical use. These safeguards are especially important given the current mental health and isolation crisis among young people, which makes these tools very attractive.

Update: The family has filed a lawsuit against Character.ai.