Stanford’s AI-Assisted Tutoring Study

A new study from Stanford released today suggests that AI-assisted tutoring, when designed to support rather than replace human tutors, can have a moderately positive impact on student learning outcomes.

Study Overview:

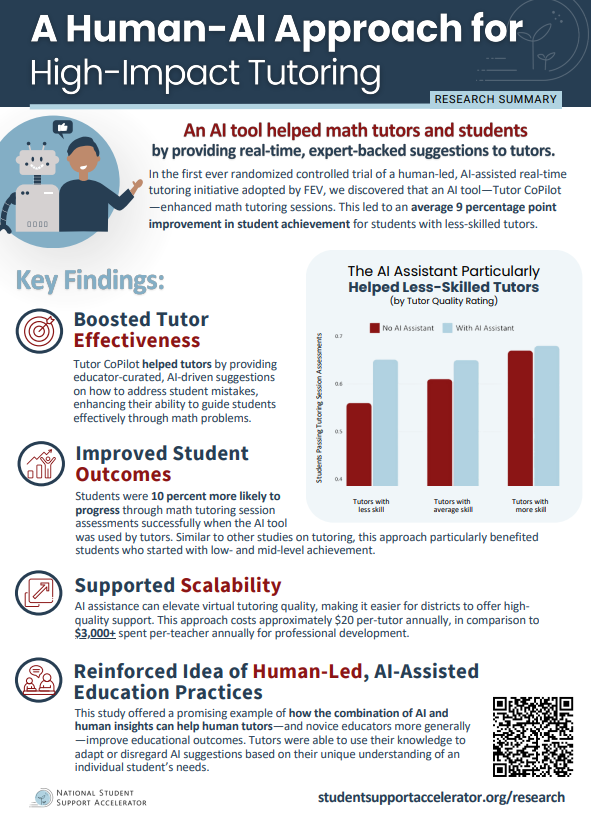

Stanford researchers conducted a randomized controlled trial of about 1,000 students and 900 tutors to examine the effectiveness of an AI-powered digital tutoring assistant called Tutor CoPilot.

Tutor CoPilot provides educator-curated, AI-driven suggestions on how to address student mistakes.

Tutor CoPilot showed promise in improving students' short-term performance in math. The impact was particularly strong for students with less-skilled tutors, who showed a 9% improvement in achievement.

Tutors using the AI tool were more likely to ask helpful questions and less likely to give students direct answers.

The estimated cost to run the AI system is approximately $20 per tutor annually, based on the two-month study.

Similar to other tutoring studies, this approach particularly benefited students who started with low- and mid-level achievement.

When asked to review the study by The 74 (thanks Greg Toppo), I shared these thoughts:

Intelligent tutoring is the holy grail for tech and education, going back to Bloom's Two Sigma problem (even though the results have never been replicated). From Khanmigo to Sam Altman's mention of a tutor for everyone in his Intelligence Age post, it is a big push for EdTech companies.

Great to see a well-designed study on the impact of GenAI on students and tutors, and I would love to see more in this space. The focus on economically disadvantaged students, minority students, and English learners (ELs) was refreshing to see.

As for the results, there really isn't anything too substantial or statistically significant except for the impact on the lowest-rated tutors. This closing of the skill gap has been mirrored in studies of other industries such as consulting (the BCG papers), so there are a lot of signs pointing to this as a first major benefit of GenAI. The students in the treatment group did not improve their final assessment scores, so the two-month study showed minimal impact overall.

Most of the chatbot use came during the "meat" of the tutoring, e.g., problem solving and explanations, which is where a tool like this stands to offer the most benefit as the technology advances.

The key issue identified by the tutors was the lack of leveling to student grade/age, which should improve with models that can reason.

The design prioritized student privacy, which was great, but it also limited the quality of the support to the tutor, as the context was only the last 10 responses.

Check out the full study and one-pager.

Check out the review piece on the study by The 74.

Check out Wharton’s recent study that found that students who use AI tutors with no safeguards may begin to over-rely on the tools and perform worse when access is removed.