OpenAI's SimpleQA: A Reality Check on AI Hallucinations

Large language models like ChatGPT are very good at convincing users their outputs are correct when they make mistakes all the time. Just this week, an education leader in Alaska was in the news because she used GenAI to create a new cellphone policy that included academic studies that did not exist. (Read the article here.)

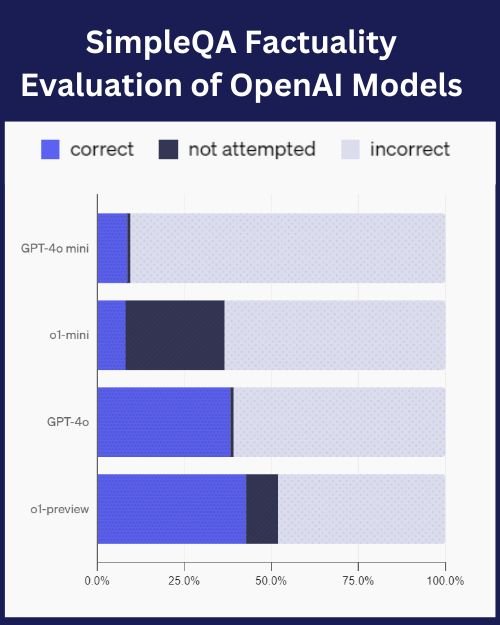

To help combat these inaccuracies (called hallucinations), OpenAI just introduced their new open-source benchmark, SimpleQA. By focusing on short, fact-seeking queries, SimpleQA’s goal is to assess the “factuality” of language models and help drive “research on more trustworthy and reliable AI.”

The benchmark:

- Double-verifies answers by independent AI trainers

- Includes questions that span multiple topics (science, politics, arts, sports, etc.)

- Grades responses as "Correct," "Incorrect," or "Not attempted"

- Measures both accuracy of the answers and the model's confidence in it's answer

Key Findings:

- Larger models show better accuracy in outputs, but there is still significant issues with accuracy

- Models tend to overstate their confidence

- Higher response consistency correlates with better accuracy

The fact that even advanced models like GPT-4 score BELOW 40% on SimpleQA illuminates the concerning gap in AI systems' ability to provide reliable factual information.

While the benchmark's narrow focus on short, fact-seeking queries with single, verifiable answers has clear limitations, we are encouraged to see that it at least establishes a measurable baseline for assessing a language model's level of factual accuracy.

Hopefully, this metric is a starting place that serves as both a reality check and a catalyst for developing more trustworthy AI models.